Software Quality Assurance & Your ETRM Implementation Project

Energy trading and risk management (ETRM) systems provide sophisticated features and functionality that not only support the entire origination-to-settlement transaction life cycle but also provide complex calculations for reporting (e.g., WACOG, MtM). In addition, ETRM solutions are rarely implemented in a stand-alone fashion but are usually tightly integrated into a heterogeneous systems environment.

The characteristics of a rich feature set and extensive integration lead to increased implementation risk and pose a special challenge from a software quality assurance (QA) perspective. Developing a risk-informed testing strategy early in the project life cycle will improve delivered solution quality without inflating implementation costs.


Purpose & Objective Of Testing

The primary purpose of software testing is to provide an objective evaluation of the quality of the component or components under review and to surface instances of non-performance, called defects. Broadly, testing seeks to validate and verify that the ETRM solution and its associated integration:

- Meets the needs of the business and IT stakeholders.

- Consistently performs as expected.

- Is ready for productive deployment.

To evaluate a solution against this high-level set of standards, several different types of testing can be used (e.g., installation testing, regression testing). Best-practice guides for software testing target the assessment of custom code, so many of their recommendations need not be applied to a commercial off-the-shelf solution like an ETRM system. Part of what companies pay for when they purchase third-party software is a foundational level of product QA performed before it’s ever turned over to the customer for use.

A Risk-Based Approach To A Testing Strategy

A quick glance at a software QA textbook, a testing whitepaper, or even Wikipedia reveals an almost dizzying array of testing approaches, levels, and types. Spending time studying them in more detail might convince the reader that all serve an important purpose and should be incorporated into any IT project to cover all the bases, but that simply wouldn’t be affordable.


Quality can be built into the integrated solution in many ways from the outset of the project, and the testing strategy should clearly define which methods will be employed to do so. Conversely, it should specify which will not: scope creep can take many forms, and testing is often one of them. How, then, can it be decided which testing activities should be included?

At its core, and in this context, software QA is about reducing the risk that, after going live, the solution fails in a way that has a significant adverse effect on the business. Every organization’s appetite for risk is different. Below are some key areas of potential failure to consider:

- Direct impact on billing and/or cash flow.

- Lack of visibility to active commodity price risk.

- Inability to manage counterparty/credit risk.

- Failure of a key financial control.

- A gap in regulatory compliance.

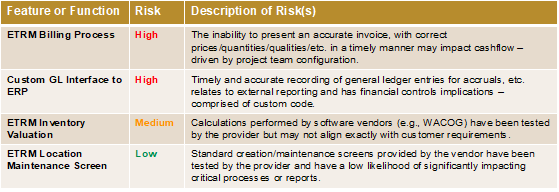

There are obviously other risks that’ll be important to consider for certain organizations. In fact, a company’s enterprise risk matrix and the project’s risk register can both be examined to identify others that should be contemplated. Once these risks have been enumerated, they can be used to inform the “what” and the “how” for the testing strategy. Reviewing the project scope document(s) and the ETRM solution architecture will aid in firming up units and assemblages that need to be tested.
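The step from enumerated risks to the “what” and the “how” can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the risk weights, the uplift for custom code and external sources, and the score thresholds are all hypothetical and would need to be set according to each organization’s risk appetite.

```python
from dataclasses import dataclass

# Hypothetical weights for the failure areas listed above (higher = more severe).
RISK_WEIGHTS = {
    "billing_cash_flow": 5,
    "price_risk_visibility": 4,
    "credit_risk": 4,
    "financial_control": 3,
    "regulatory_compliance": 5,
}


@dataclass
class Component:
    """A unit or assemblage identified from the scope and architecture documents."""
    name: str
    risks: set[str]                # keys of RISK_WEIGHTS that a failure would trigger
    custom_code: bool = False      # not previously tested by the ETRM vendor
    external_source: bool = False  # integrates with a system outside the company


def risk_score(component: Component) -> int:
    """Sum the weights of the risks touched, plus an assumed uplift of 2 per flag."""
    score = sum(RISK_WEIGHTS[risk] for risk in component.risks)
    if component.custom_code:
        score += 2
    if component.external_source:
        score += 2
    return score


def planned_test_levels(component: Component) -> list[str]:
    """Map a score to testing levels; the thresholds 8 and 4 are illustrative only."""
    score = risk_score(component)
    if score >= 8:
        return ["unit", "integration", "system", "acceptance"]
    if score >= 4:
        return ["integration", "acceptance"]
    return ["acceptance"]


if __name__ == "__main__":
    interface = Component(
        name="cargo_event_dates_interface",
        risks={"price_risk_visibility"},
        custom_code=True,
        external_source=True,
    )
    print(interface.name, risk_score(interface), planned_test_levels(interface))
```

A scoring scheme like this is deliberately coarse. Its value lies in forcing an explicit, reviewable decision about which components receive more testing and which receive less.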


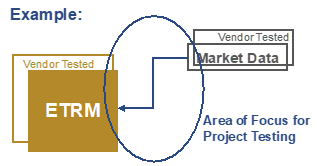

For example, if there’s an interface that brings the latest event dates for crude cargos into the ETRM system, then that would clearly be a component requiring extra scrutiny because it trips the second risk listed above. Moreover, that integration likely includes custom code that will not have been previously tested by the ETRM vendor, thereby increasing the likelihood of defects. In the example depicted below, the integration is potentially to an external source, raising the specter of cybersecurity exposure. This is an indication that more methods, levels, and types of testing should be applied, including unit testing, integration testing, and user acceptance testing (UAT) at a minimum. Additional examples include:

The testing strategy should define the tools and methods to be utilized to verify that the system responds correctly. In addition, it’ll outline what needs to be accomplished, how to achieve it, and when each type of test will be performed. The testing strategy shouldn’t be considered in a vacuum and must be aligned with other major project activities such as communication, training, conversion and cutover, technical change control, etc. as part of an integrated delivery approach. The resulting strategy can then serve as a framework for preparing a detailed set of test plans and appropriately incorporate them into the overall project schedule.

Traditional Testing Approaches, Levels & Types

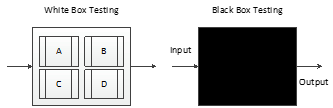

Once project leadership has settled on “what” needs to be tested, the next step is to map out and plan for the “how.” While there are several different approaches that can be taken when testing integrated ETRM solutions, a few can be reasonably set aside because they focus on more technical aspects of software quality that don’t necessarily address any of the risks discussed previously.

Myriad types of testing exist, each tailored to achieve the verification or validation objectives of an ETRM software testing program in a slightly different way, and each focused on particular quality attributes of an integrated solution. Not all of these types will be appropriate for, or used in, the test program for an ETRM project. These types of testing also span all four primary testing levels (defined below).

One static testing approach that’s very common to ETRM system implementations takes the form of walk-throughs, often referred to as scenario modeling. This approach couples well with dynamic testing approaches that leverage predefined test cases applied against technical objects like interfaces to round out a more complete software QA program.

Generally, the testing levels applied to ETRM system projects consist of the following:

- Unit Testing (generally, white box)

- Integration Testing

- System Testing

- Acceptance Testing

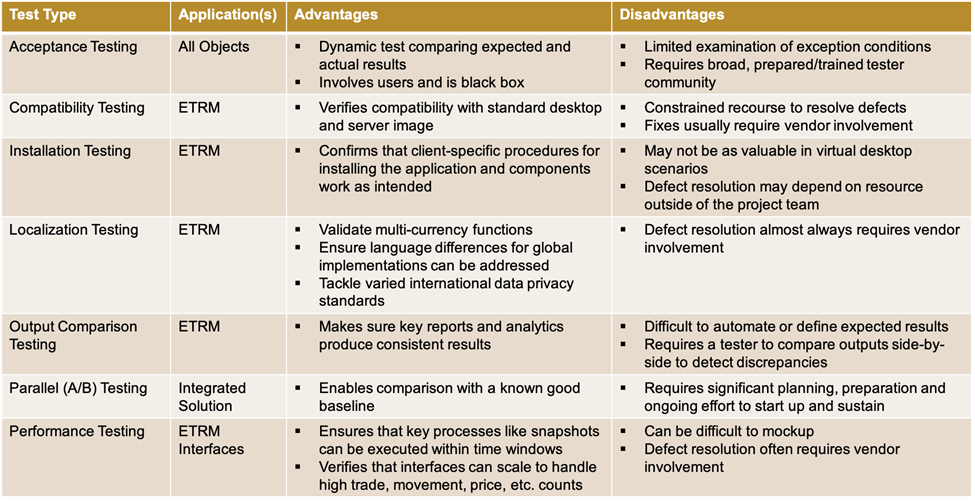

The menu of available test types is extensive, but the table below aims to highlight some additions that are well suited to testing ETRM systems and associated integration in a more traditional software delivery construct (waterfall vs. Agile).

Several test types aren’t applicable in this scenario such as alpha, beta, concurrent, conformance or type testing, etc., and can be set aside without significantly influencing solution quality risk.

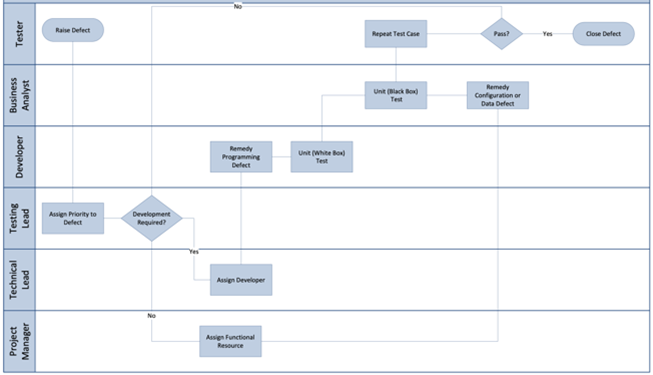

Defect Management

Defects are a normal part of software testing, and a number of tools can make logging, tracking, and reporting on them more straightforward for the team and project leadership. Clear definitions of what defects are (and aren’t), combined with an easy-to-follow prioritization scheme, sometimes take a back seat to tools and processes, yet they contribute directly to testing outcomes. A defect could be defined as an identified software issue that results in a failure of system performance as compared to the documented, approved system configuration blueprint or functional specification.

One relatively simple way to agree on a rubric for defect prioritization is to align it with the IT department’s existing incident severities since this is something with which most stakeholders will already be familiar. In any event, these levels should serve the purpose of unambiguously determining which issues the project can go live without resolving, which must be remediated before moving to the next level of testing (i.e., entrance/exit criteria), and so on.
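A minimal sketch of how severity levels can drive entrance/exit criteria follows. The four severity names and the per-level blocking thresholds are assumptions chosen for illustration; a real project would substitute the IT department’s own incident severities and agreed criteria.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical severities, ordered so that a lower number is more severe."""
    CRITICAL = 1
    HIGH = 2
    MEDIUM = 3
    LOW = 4


@dataclass
class Defect:
    defect_id: str
    severity: Severity
    resolved: bool = False


# Assumed exit criteria: an open defect at this severity or worse blocks
# the project from leaving the given testing level.
BLOCKING_THRESHOLD = {
    "integration": Severity.HIGH,
    "acceptance": Severity.MEDIUM,
}


def blocking_defects(defects: list[Defect], level: str) -> list[Defect]:
    """Return the unresolved defects that prevent exiting the given level."""
    threshold = BLOCKING_THRESHOLD[level]
    return [d for d in defects if not d.resolved and d.severity <= threshold]


def can_exit(defects: list[Defect], level: str) -> bool:
    """True when no unresolved defect meets the blocking threshold."""
    return not blocking_defects(defects, level)


if __name__ == "__main__":
    log = [
        Defect("D-1", Severity.MEDIUM),
        Defect("D-2", Severity.HIGH, resolved=True),
        Defect("D-3", Severity.LOW),
    ]
    print("exit integration:", can_exit(log, "integration"))
    print("exit acceptance:", can_exit(log, "acceptance"))
```

Encoding the thresholds as data rather than prose makes the go/no-go decision repeatable and removes debate at the end of each test cycle.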

Roles & Responsibilities In Testing

For test planning, execution, and associated administration some additional roles typically need to be identified, defined, and ultimately fulfilled by (predominantly) existing project team members. Note that these can either be additional roles to be assigned to positions/individuals within the team or altogether new positions on the project organizational chart, depending on the scope and scale of the project.

- Testing Lead – Given the scope and complexity common to ETRM projects, a dedicated test lead will sometimes be staffed. The testing lead is responsible for creating the overall test strategy, reporting test/defect status, facilitating testing-related meetings, and coordinating activities pertaining to testing with those outside the project.

- Functional Tester – Functional team member(s) responsible for designing and documenting test plans, executing test scripts, documenting results, and logging defects. This role is typically fulfilled by an IT business analyst or functional consultant.

- Technical Tester – A technical team member such as a developer, database administrator (DBA), application security analyst, etc. responsible for unit testing their development components or configuration before releasing them to the functional team for further validation.

- User Tester – A business stakeholder assigned to the project team to execute test plans and evaluate the performance of the system against acceptance criteria. This role is similar to the functional tester role but is only active during user acceptance testing (UAT) cycles.

- Vendor Liaison – An optional role, sometimes added on larger projects, responsible for logging and tracking issues found in the ETRM product itself and coordinating their resolution with the software vendor.

Some very large projects taking place in highly mature IT organizations may additionally choose to employ the services of a dedicated QA testing team. This can have the advantage of creating scale, allowing for more cycles of testing, as well as creating separation between those responsible for the system configuration/development and those verifying and validating its functioning.

Additional Key Considerations

Other key aspects of QA and a well-rounded testing approach relate to the real business scenarios and data used as the basis for testing. While early stages of testing (e.g., unit testing) may use mocked-up datasets created by the developer, later stages such as integration testing and UAT should be based on typical business scenarios routinely faced in daily operations and usage of the system.


Using realistic, typical end-to-end business scenarios, along with the underlying business data, benefits the organization in two ways: it improves the likelihood of discovering key defects before the solution is deployed, and it ensures that the tests being executed are relatable to the business users who’ll be involved in testing and validation efforts.

Investing in documenting tests, which utilize these business scenarios, will not only improve the quality of the initial solution testing, but it’ll also be beneficial over the long term as they can be leveraged and reused by the organization. Tests can be reused to regression test the solution as new functionality, enhancements, or fixes are introduced into complex integrated solutions. These key business scenarios become the backbone for future testing to ensure that, as the solution matures, no unintended breaks of existing functionality have been introduced.
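The idea of a reusable scenario library can be sketched as follows. Everything here is a simplified assumption: the `wacog` function is a stand-in for a call into the system under test and computes a plain volume-weighted average price, and the two scenarios use made-up volumes and prices rather than real business data.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """A documented business scenario with its agreed expected result."""
    name: str
    purchases: tuple[tuple[float, float], ...]  # (volume, unit price) pairs
    expected_wacog: float


def wacog(purchases: tuple[tuple[float, float], ...]) -> float:
    """Stand-in for the system under test: volume-weighted average cost."""
    total_volume = sum(volume for volume, _ in purchases)
    if total_volume == 0:
        raise ValueError("total volume must be non-zero")
    return sum(volume * price for volume, price in purchases) / total_volume


# Illustrative scenario library; real entries would come from business users.
SCENARIOS = [
    Scenario("two equal lots", ((100.0, 2.0), (100.0, 4.0)), 3.0),
    Scenario("weighted lots", ((300.0, 2.0), (100.0, 4.0)), 2.5),
]


def run_regression(calc, scenarios, tol: float = 1e-9) -> list[str]:
    """Run every scenario through calc and return the names of those that fail."""
    failures = []
    for scenario in scenarios:
        actual = calc(scenario.purchases)
        if not math.isclose(actual, scenario.expected_wacog, abs_tol=tol):
            failures.append(scenario.name)
    return failures


if __name__ == "__main__":
    failed = run_regression(wacog, SCENARIOS)
    print("failed scenarios:", failed or "none")
```

Because the scenarios are data, the same library can be rerun unchanged after each enhancement or fix, and any scenario name in the returned list points directly at functionality that has regressed.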

As more organizations move towards Agile implementation frameworks and DevOps models, the number of deployments per year will increase while the overall complexity of integrated solutions does not diminish. With more frequent deployments and application changes, a library of documented business scenario tests and their underlying datasets allows these tests to be leveraged continuously, making the quality payback on the investment even greater. When tests can be automated, execution time can be reduced significantly without sacrificing quality for each incremental release.

Conclusion

ETRM systems are some of the most tightly integrated and functionality-rich applications within a business and IT landscape. Developing a risk-informed testing strategy and investing in the documentation and automation of tests will improve the overall quality of the solution and reduce project delivery risk. In the long run, this will also allow for a more stable platform and lower costs to sustain and improve the solution while remaining responsive to continuously evolving demands of trading and risk management.
